Calculating the complexity of an algorithm is a measure of its performance. There are two types of complexity:

- Spatial complexity: quantifies memory usage

- Time complexity: quantifies the speed of execution

Temporal complexity

The objective of a temporal algorithmic complexity calculation is to be able to compare the efficiency of algorithms solving the same problem. In a given situation this, therefore, makes it possible to establish which of the available algorithms is the most optimal.

For large data, the difference between the execution times of two algorithms with the same purpose can be of the order of several days!

Performing a time complexity calculation amounts to counting the number of elementary operations (assignment, arithmetic or logical calculation, comparison, etc.) performed by the algorithm.

Since it is only a question of comparing algorithms, the rules of this calculation must be independent:

- The programming language used;

- The processor of the computer on which the code will be executed;

- Any compiler used. For the sake of simplicity, we will assume that all the elementary operations are at equal cost, i.e. 1 "unit" of time.

Example: a = b * 3: 1 multiplication + 1 assignment = 2 “units”

The time complexity of an algorithm will be expressed by a function, noted T (for Time), which depends on:

- The size of the data passed in parameters: the larger this data, the more elementary operations will be needed to process it. We denote by n the number of data to be processed.

- Of the data itself, of the way in which the different values that constitute it are distributed. for example, if we perform a sequential search for an element in an unsorted list, we go through the elements one by one until we find, or not, the one we are looking for. This course can stop at the beginning if the first element is "the right one". But it may also be necessary to browse the entire list if the element sought is in the last position, or even does not appear there.

This remark leads us to clarify a little our definition of time complexity. Strictly speaking, we can indeed distinguish two forms of time complexity:

- complexity in the best of cases: this is the most favorable situation,

for example: search for an element located at the first position of a list

- complexity in the worst case: this is the most unfavorable situation,

for example: searching for an element in a list when it is not there

We will most often calculate the complexity in the worst case because it is the most relevant. It is always better to assume the worst.

Magnitude

To compare algorithms, it is not necessary to use the function T, but only the asymptotic order of magnitude, noted O (“big O”).

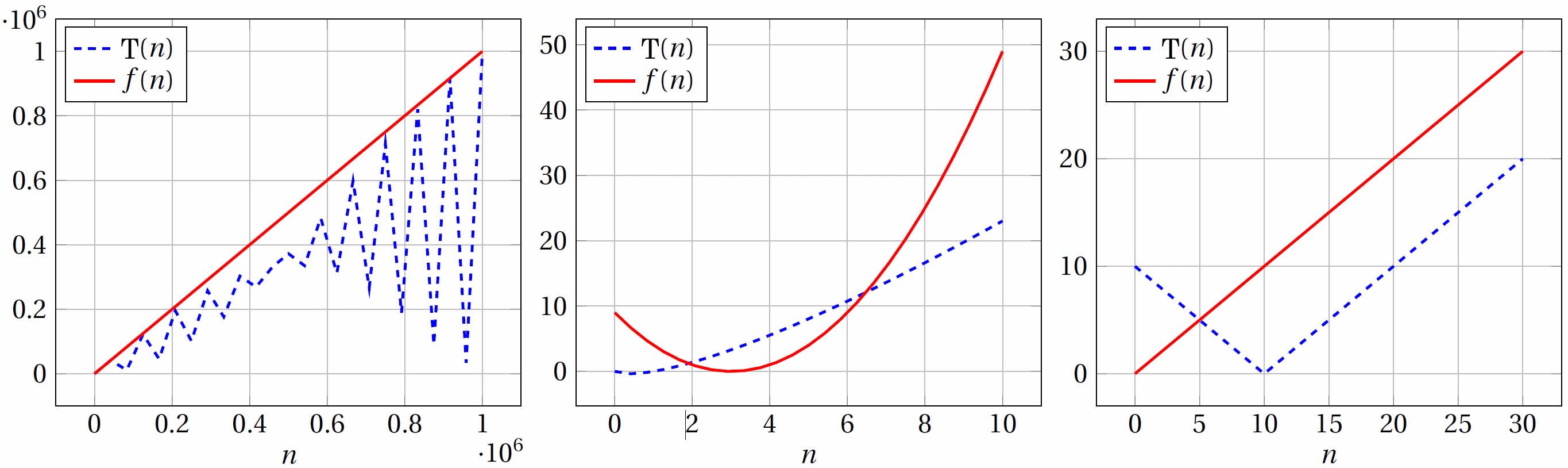

A function T(n) is in O(f(n)) (“in big O of f(n)“) if:

∃n0∈N,∃c∈R+,∀n∈R+,n≥n0⇒|T(n)|≤c|f(n)|

In other words :

T(n) is in O(f(n)) if there is a threshold n0 from which the function T is always dominated by the function f, up to a fixed multiplicative constant c.

Examples:

T1(n)=7=O(1) T2(n)=12n+5=O(n) T3(n)=4n2+2n+6=O(n2) T4(n)=2+(n−1)×5=O(n)

Complexity classes

O = Type of complexity O(1) = constant O(log(n)) = logarithmic O(n) = linear O(n×log(n)) = quasi-linear O(n2) = quadratic O(n3) = Cubic O(2n) = exponential O(n!) = factorial

factorial def(n):

fact = 1

i = 2

while i <= n:

fact = fact * i

i = i + 1

return fact

assignment: 1

assignment: 1

iterations: at most n − 1

comparison: 1

multiplication + assignment: 2

addition + assignment: 2